How we redesigned candidate screening and increased search adoption by 73.80% and filter usage by 14x

Summary

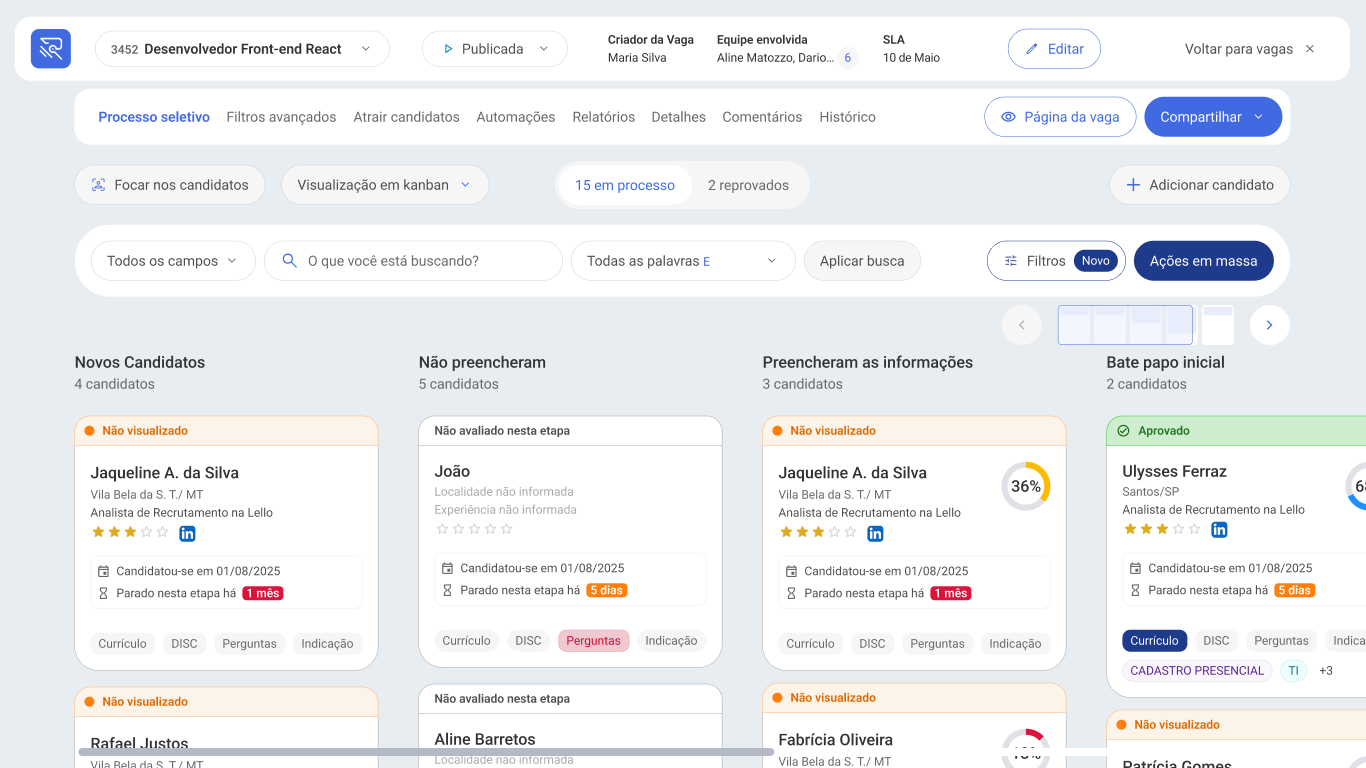

Recruiters were spending most of their day "fighting" with the screening screen: to find the right candidates, they had to navigate through huge lists, filter everything manually, and remember configurations in their heads.

As a result, filters were rarely used, search barely helped, and the screening flow didn't make the next step clear. Screenings were slow, repetitive, and inconsistent across recruiters, and support tickets asking how search and filters worked kept increasing.

Based on usage data and interviews, I mapped this daily routine, identified key friction points, and redesigned the screening screen as a true decision dashboard: contextual search aligned with recruiter vocabulary, smarter and more predictable filters, cards highlighting only critical information, and clear candidate states.

After the redesign, search and filters became part of the real screening routine: there was a 73.8% increase in search usage, 14x more filter usage, and a reduction of about 26% in average screening time per session, making the process faster, more predictable, and more sustainable for the recruitment team.

Beyond the numbers, recruiters reported that it became much easier to understand what stage each candidate is in and to set up appropriate filters for each job.

Design process

Discovery

- Desk Research

- Benchmark

- Heuristic Evaluation

- Usability Testing of existing experience

- Quantitative Research

Ideation

- Opportunity Tree

- Wireframes

Development

- Interactive Prototypes

- Usability Testing

Delivery

- Documentation

- Handoff

Challenge

Very low usage of filters and search

Recruiters were avoiding using the ATS filters and search, precisely the functionality that should speed up their work.

Many support tickets

The growing volume of support tickets signaled something beyond simple technical questions.

Quantitative Research Results (charts): Usage Frequency and Result Accuracy

Lack of trust in results

Through assisted usability tests, quantitative research, and heuristic analysis, we discovered the core of the problem: users did not trust the presented results.

Lack of clarity on applied filters

The interface did not make it clear which filters were applied, generating doubts like 'am I losing qualified candidates?' and 'are these results really filtered?'.

Identified in usability tests

Not used due to lack of trust

Users do not use filters or search because they don't find them useful or don't understand how they work.

Impact: Underutilized resources may indicate failure to meet user needs.

Filter function is unclear

The source filter and name-only search caused confusion as it wasn't clear which options were available.

Impact: Users might avoid using this filter and search due to lack of clarity.

Recruiters took up to 3x longer to screen candidates

The result?

An essential functionality for effective recruitment was being underutilized, forcing recruiters to manually scroll through endless lists of candidates, which is exactly what filters are meant to prevent.

Making the invisible visible

The solution was to turn uncertainty into clarity

We completely rethought the search component and filters, creating a system where users could know exactly what they were filtering at any moment, and importantly, trust the presented results.

Made with AI

Low-fidelity interactive prototype for testing the new solution

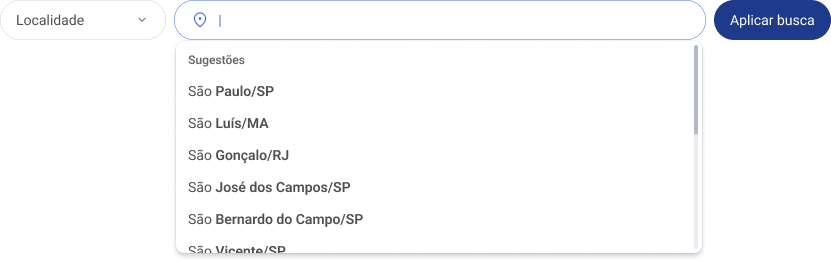

Contextual Search Field

Users struggled with AND/OR operators in search and became frustrated when results didn't match their expectations.

So we simplified the flow:

Search by Name

Automatic AND for full names

Search by Location

Automatic OR for full names and autocomplete

Search by All Fields

OR and AND as search options

The technical complexity remains, but now behind the scenes.
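The three search modes above can be sketched roughly as follows. This is a minimal illustration, not the product's implementation: the `Candidate` fields, the function names, the sample data, and the choice of case-insensitive substring matching are all assumptions made for the example, and the location search assumes the recruiter picks one or more locations through autocomplete.

```python
from dataclasses import dataclass
from typing import Literal


@dataclass
class Candidate:
    # Hypothetical fields, chosen only for this sketch.
    name: str
    location: str
    resume: str = ""


def _terms(query: str) -> list[str]:
    """Split a query into lowercase terms."""
    return query.lower().split()


def search_by_name(candidates: list[Candidate], query: str) -> list[Candidate]:
    """Automatic AND: every typed term must appear in the candidate's name."""
    terms = _terms(query)
    return [c for c in candidates
            if terms and all(t in c.name.lower() for t in terms)]


def search_by_location(candidates: list[Candidate],
                       locations: list[str]) -> list[Candidate]:
    """Automatic OR: a candidate matches if they are in any selected location."""
    wanted = {loc.lower() for loc in locations}
    return [c for c in candidates if c.location.lower() in wanted]


def search_all_fields(candidates: list[Candidate], query: str,
                      operator: Literal["AND", "OR"] = "AND") -> list[Candidate]:
    """The recruiter explicitly chooses AND or OR across all fields."""
    terms = _terms(query)
    combine = all if operator == "AND" else any

    def haystack(c: Candidate) -> str:
        return f"{c.name} {c.location} {c.resume}".lower()

    return [c for c in candidates
            if terms and combine(t in haystack(c) for t in terms)]


# Made-up sample data for trying the functions out.
CANDIDATES = [
    Candidate("Ana Silva", "Recife", "python sql"),
    Candidate("Ana Costa", "Porto", "java"),
    Candidate("Bruno Silva", "Lisbon", "python"),
]
```

With this shape, the recruiter only chooses an operator in the "all fields" mode; in the other two modes the operator is fixed by the kind of search being run.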

Result: 73.8% increase in search usage.

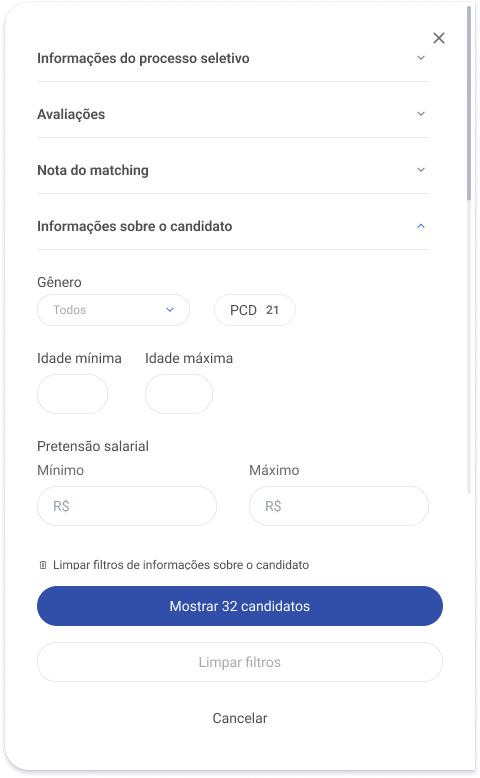

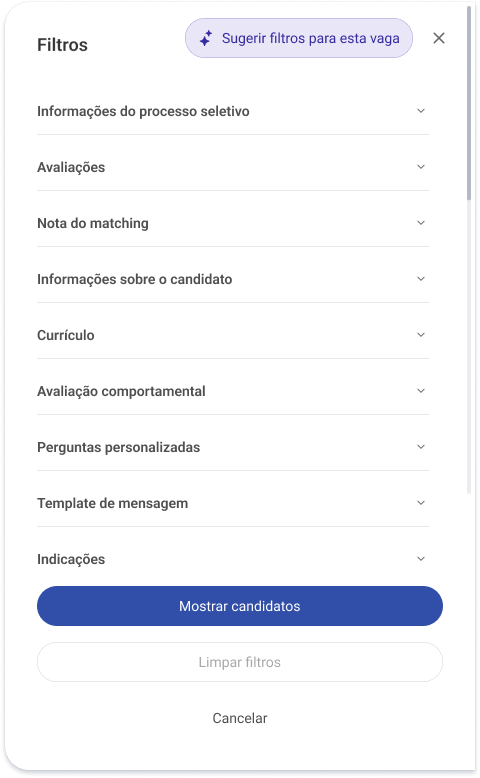

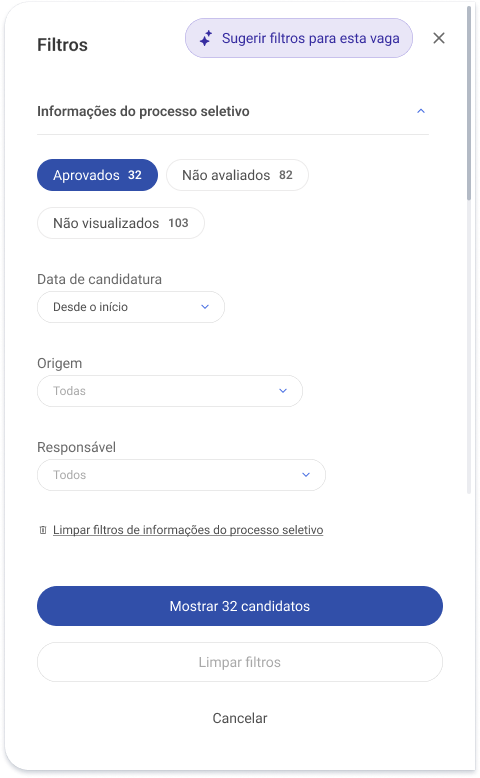

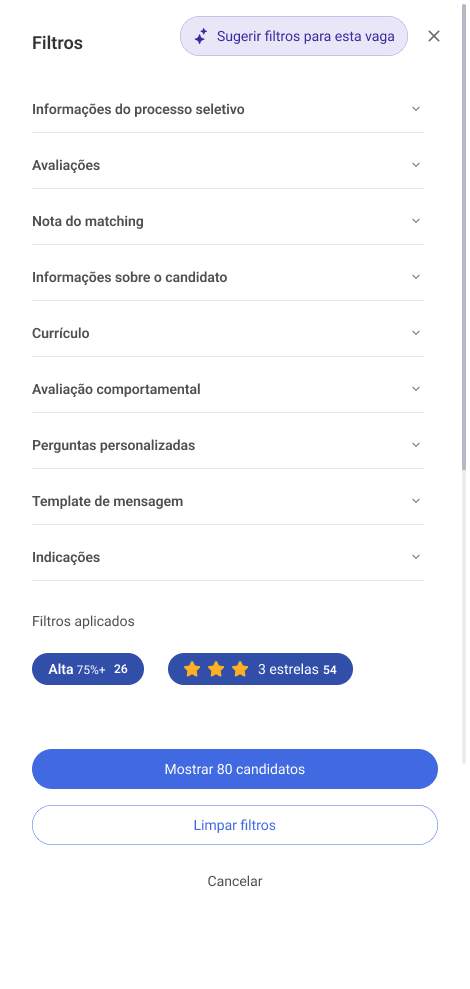

Screening Filters

Recruiters found that the filters didn't reflect the real screening context: little control, unpredictable results, and 'empty' searches. So we redesigned the experience: we added genuinely useful options, organized everything into clear categories, and added counters. Now, the impact of each choice is immediately visible.

New Filters

We included some candidate information filters such as:

- Gender

- Age

- Salary Expectation

Categories

To improve information architecture, we organized filters by categories:

- Process Information

- Evaluations

- Matching Score

- Resume

- Behavioral Assessment

- Custom Questions

Filter Counters

To make results predictable and avoid 'empty' searches, we added counters that show the number of results before the search runs, in two places:

- On each filter

- On the show results button
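One way to compute both counters is sketched below. It is an illustration under assumptions: candidates are plain dictionaries, the field names (`stage`, `source`) are hypothetical, and a filter is modeled as a set of selected options per field, with OR inside a field and AND across fields.

```python
from collections import Counter


def matches(candidate: dict, filters: dict) -> bool:
    """True when, for every field with selected options, the candidate's
    value is one of those options. Fields with no selection are ignored."""
    return all(candidate.get(field) in selected
               for field, selected in filters.items() if selected)


def count_results(candidates: list[dict], filters: dict) -> int:
    """Counter for the 'show results' button: candidates passing all filters."""
    return sum(matches(c, filters) for c in candidates)


def option_counts(candidates: list[dict], filters: dict, field: str) -> Counter:
    """Counter shown on each option of `field`: how many candidates would
    match that option, given the selections already made in other fields."""
    others = {f: s for f, s in filters.items() if f != field}
    return Counter(c[field] for c in candidates
                   if field in c and matches(c, others))
```

An option whose count is zero can then be flagged before the recruiter selects it, which is how counters of this kind help avoid 'empty' searches.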

AI to Speed Up Screening

Beyond the new options, we designed a button that uses our AI to suggest filters based on the job specifications, to speed up screening even further.

The logic became invisible and the choices evident: clear categories, new criteria, counters, and one-click AI suggestions add up to optimized screening.

Result: filters received 14x more interactions.

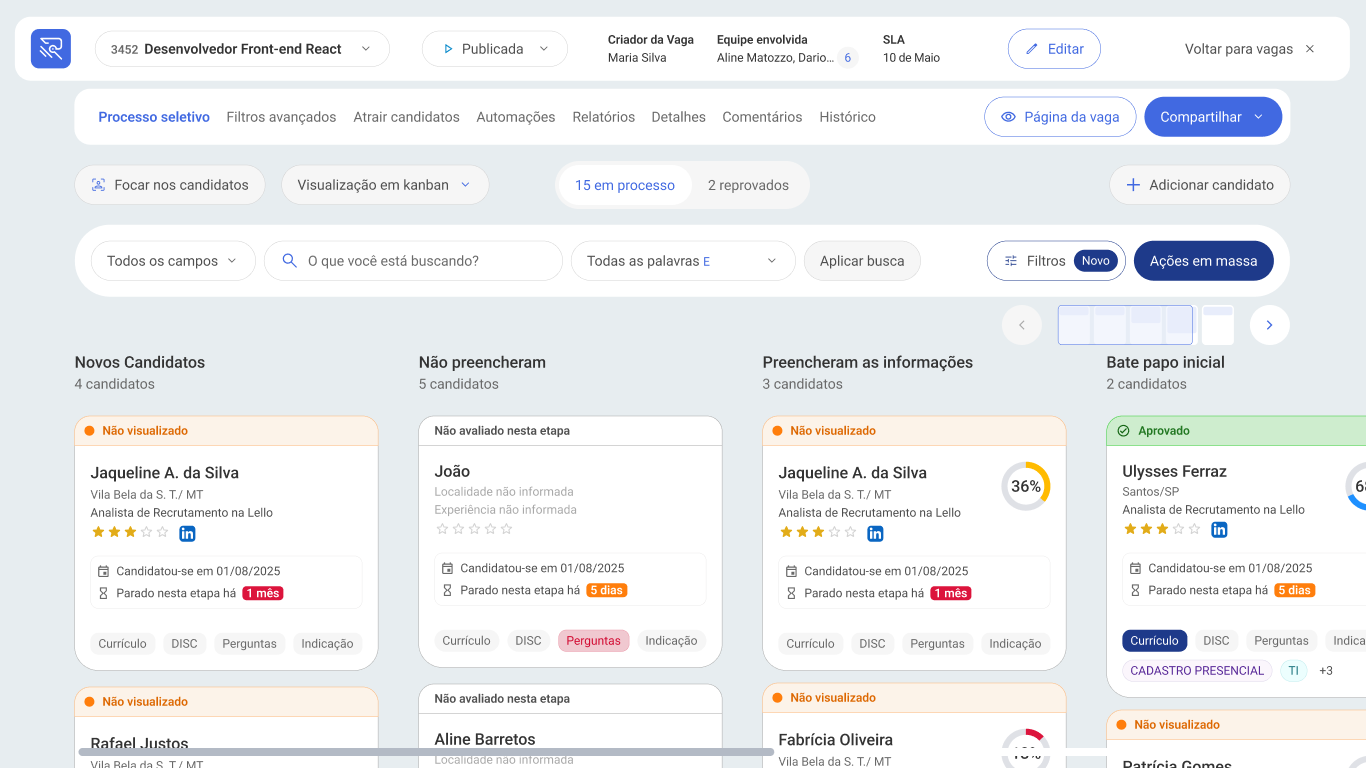

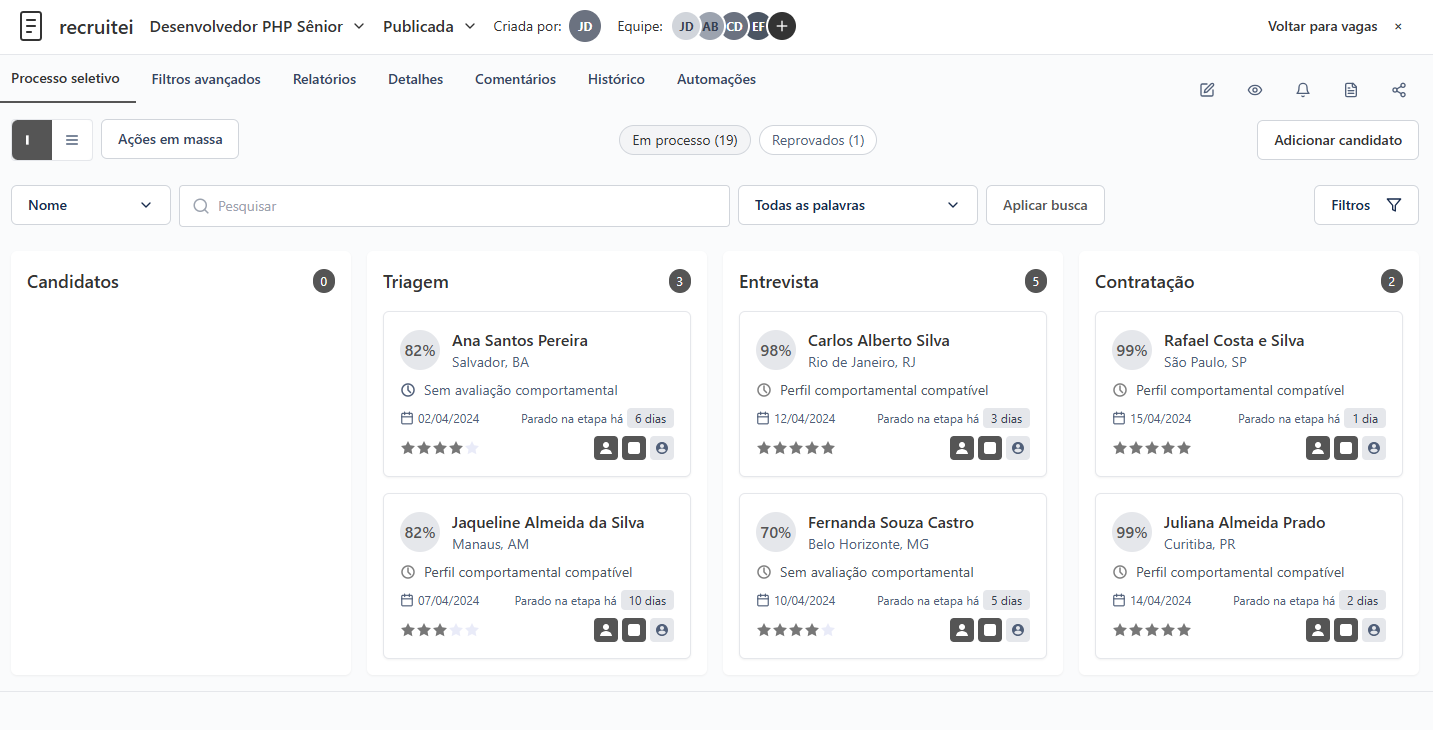

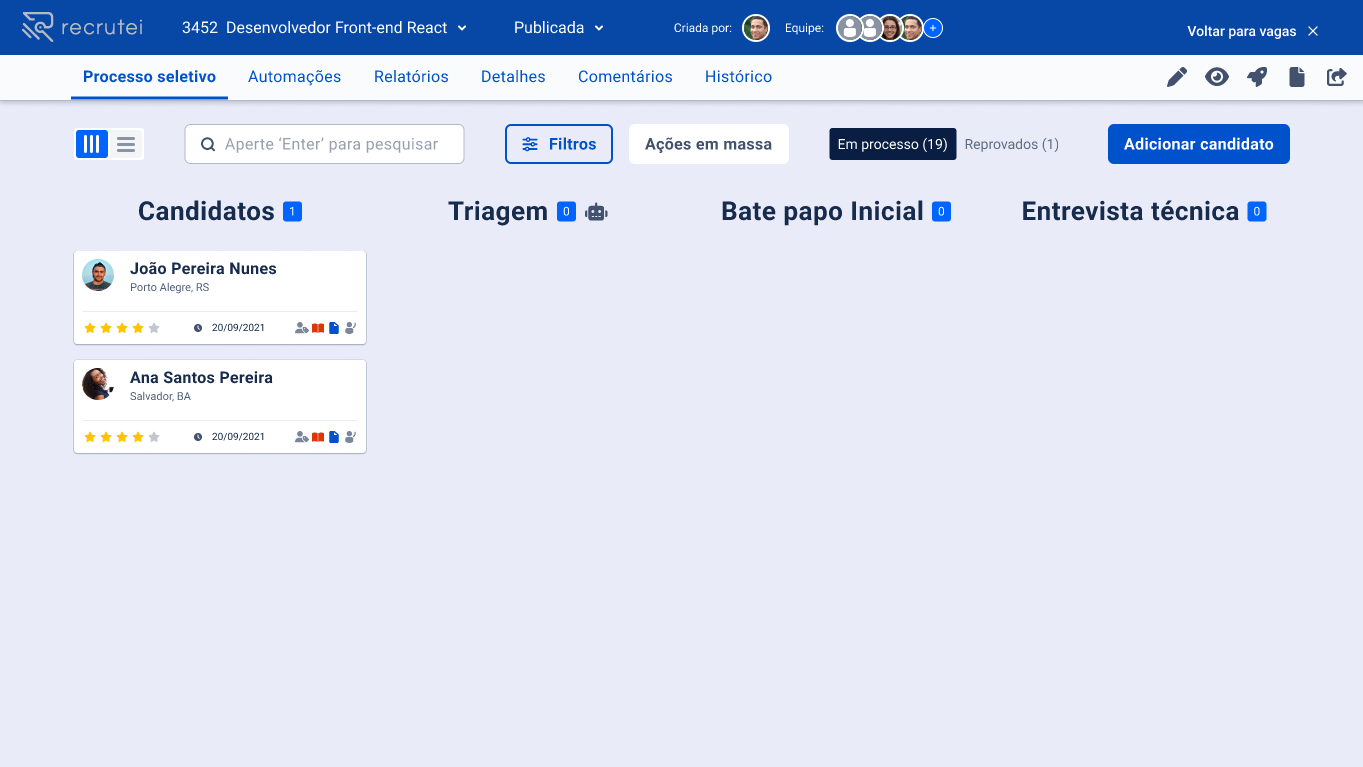

Redesign

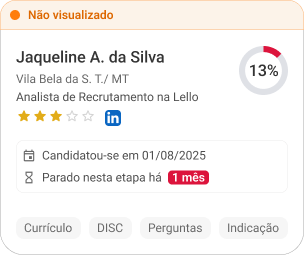

Additionally, we redesigned the entire screen to align it with the new system and strengthen interface credibility.

Before

After

New Components

Search Field

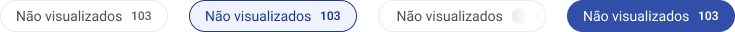

Filter Chip with Counter

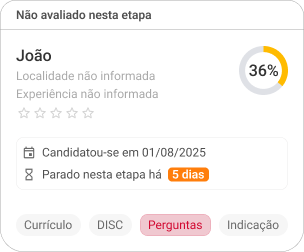

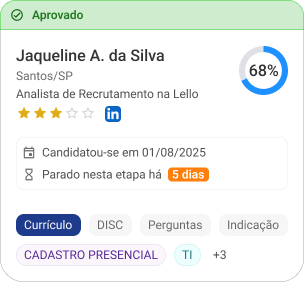

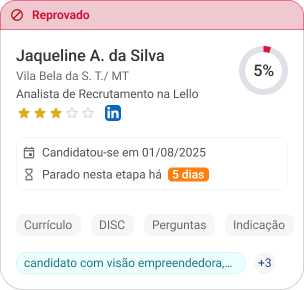

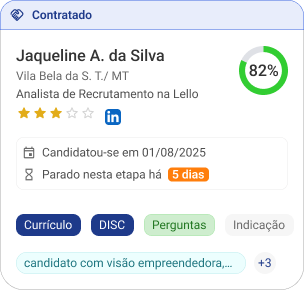

New Candidate Cards

Launch Trade-off

Despite its potential value, we decided not to include the AI filter suggestion function in the first release.

Usage estimates indicated high token consumption, generating a recurring cost incompatible with the product margin at that time.

We opted to prioritize the redesign of manual search and filters, solutions with low operational cost and high immediate impact, leaving AI automation planned as a roadmap evolution.

Results

Results showed a clear impact: a 73.8% increase in search component usage and greater perceived platform value, indicating that the improvements made the functionality more useful and relevant for recruiters.

Besides search, screening filters also saw a major leap: usage of this essential tool for effective screening went from 4 to 57 clicks in the same period, over 14x more interactions.
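The filter figure is a fold change, while the search figure is reported as a percentage increase, so the two are not on the same scale. A small sketch of the arithmetic behind the filter number, using only the 4 and 57 clicks stated above (the function names are illustrative):

```python
def fold_change(before: float, after: float) -> float:
    """How many times larger `after` is than `before`."""
    return after / before


def percent_increase(before: float, after: float) -> float:
    """Relative growth from `before` to `after`, as a percentage."""
    return (after - before) / before * 100


print(fold_change(4, 57))       # 14.25
print(percent_increase(4, 57))  # 1325.0
```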

This growth shows the new flow made filters more accessible and relevant in recruiters' daily lives, helping decision-making with more speed and precision.

Success Metrics

73.8% increase in search usage rate

14x more filter interactions

Data calculated from monitoring with the Clarity tool.

What we heard from recruiters

"Before I got lost in statuses, now I glance and know where each candidate is."

"The combination of search and filters felt much more natural. I can quickly get to only the candidates that really make sense."

"Screening became more fluid. I no longer need to keep going back and forth between screens to make decisions."

Representative quotes based on verbal feedback received after launch.

Next Steps

During discovery for this feature, we found that the talent pool filters also need a redesign: the complexity of these advanced filters hinders usage and causes usability issues. In the next sprints, we should work on the Talent Pool screens and flows.

We also designed an AI-based automatic filter suggestion, but chose not to include it in the first release because of high token costs, which would make the solution unviable today. This feature remains on the roadmap, to be re-evaluated when the cost per use is more sustainable.